Agents are extremely impressive, but they also introduce a lot of non-determinism, and non-determinism means sometimes weird things happen.

To combat that, we've needed to instrument our workflows to make it possible to debug why things are going wrong.

This is part of the Building an internal agent series.

Why logging matters

Whenever an agent does something sub-optimal, folks flag it as a bug.

Often, the “bug” is ambiguity in the prompt that led to sub-optimal tool usage.

That makes me feel better, but it doesn't make the folks relying on these tools feel any better: they just expect the tools to work.

This means that debugging unexpected behavior is a significant part of rolling out agents internally, and it's important to make debugging easy enough to do frequently. If it takes too much time, too much effort, or too many permissions, then your agents simply won't get used.

How we implemented logging

Our agents run in AWS Lambda, so the very first pass at logging was simply printing to standard out, to be captured in the Lambda's logs.

This worked OK for the very first steps, but it also meant that I had to log into AWS every time something went wrong, and many engineers didn't even know where to find the logs.
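A minimal sketch of a slightly more structured version of that first pass, assuming a Python runtime: printing one JSON record per event to standard out keeps everything in the Lambda's logs but makes each run's lines easy to filter. The `log_event` and `handler` names, the event fields, and the `search_docs` tool are hypothetical illustrations, not our actual code.

```python
import json
import sys
import time
from typing import Any


def log_event(run_id: str, event: str, **fields: Any) -> str:
    """Print one JSON-encoded record to stdout, where Lambda captures it in its logs."""
    record = {"ts": time.time(), "run_id": run_id, "event": event, **fields}
    line = json.dumps(record, default=str, sort_keys=True)
    print(line, file=sys.stdout, flush=True)
    return line


def handler(event: dict, context: Any) -> dict:
    """Hypothetical Lambda entry point for a single agent workflow run."""
    # aws_request_id is set on the real Lambda context object; fall back for local runs.
    run_id = getattr(context, "aws_request_id", "local")
    log_event(run_id, "workflow_start", workflow=event.get("workflow"))
    # ...the agent loop would run here, logging each (hypothetical) tool call...
    log_event(run_id, "tool_call", tool="search_docs", query=event.get("query"))
    log_event(run_id, "workflow_end", status="ok")
    return {"run_id": run_id, "status": "ok"}
```

Tagging every line with a `run_id` makes a single run easy to isolate, but reading it still means going into AWS, which is the limitation described above.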

The second pass was creating the #ai-logs channel, where every workflow run shared its configuration, the tools it used, and a link to the AWS URL where its logs could be found. This was a step up, but it still required a bunch of log spelunking to answer basic questions.

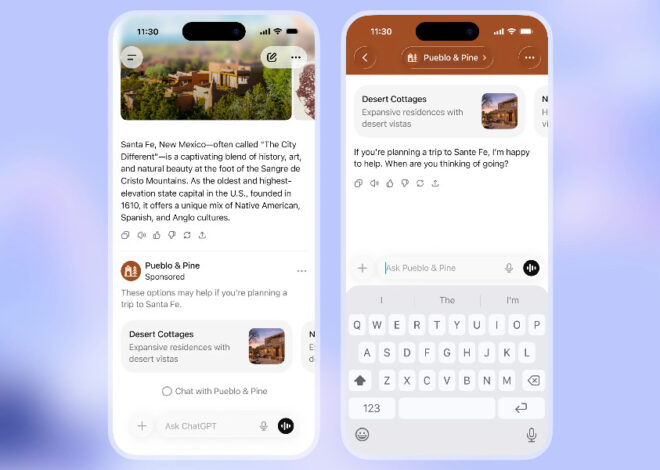
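To illustrate the shape of such a per-run message, here is a sketch, assuming the channel lives in Slack and accepts posts through an incoming webhook (which takes a JSON body with a `text` field). `WorkflowRun`, `build_summary`, `post_to_channel`, and the field names are hypothetical, and the logs URL is passed in rather than constructed.

```python
import json
import urllib.request
from dataclasses import dataclass, field


@dataclass
class WorkflowRun:
    """Hypothetical summary of one agent workflow run."""
    workflow: str
    model: str
    tools_used: list[str] = field(default_factory=list)
    logs_url: str = ""


def build_summary(run: WorkflowRun) -> dict:
    """Build an incoming-webhook payload with the run's configuration, tools, and logs link."""
    tools = ", ".join(run.tools_used) if run.tools_used else "none"
    text = (
        f"*{run.workflow}* finished\n"
        f"• model: {run.model}\n"
        f"• tools used: {tools}\n"
        f"• logs: {run.logs_url or 'n/a'}"
    )
    return {"text": text}


def post_to_channel(webhook_url: str, payload: dict) -> int:
    """POST the payload as JSON to the (assumed) webhook URL and return the HTTP status."""
    req = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status
```

Keeping `build_summary` separate from the network call makes the message format easy to check without posting anything.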

The third pass, which is our current implementation, was integrating Datadog's LLM Observability, which provides an easy-to-use way to view each span within the LLM workflow, making it straightforward to debug nuanced issues without digging through a bunch of logs. This is a massive improvement.
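Datadog's tooling records these spans for you; the sketch below is not its API, but a hypothetical stdlib-only stand-in showing what a span tree captures. The `Span`, `Tracer`, and `render` names and the span kinds are assumptions for illustration.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Iterator, Optional


@dataclass
class Span:
    """One unit of work in a run: the workflow itself, an LLM call, or a tool call."""
    name: str
    kind: str
    start: float = 0.0
    end: float = 0.0
    error: Optional[str] = None
    children: list["Span"] = field(default_factory=list)

    @property
    def duration_ms(self) -> float:
        return (self.end - self.start) * 1000


class Tracer:
    """Hypothetical minimal tracer that records nested spans in memory."""

    def __init__(self) -> None:
        self.roots: list[Span] = []
        self._stack: list[Span] = []

    @contextmanager
    def span(self, name: str, kind: str) -> Iterator[Span]:
        s = Span(name=name, kind=kind, start=time.perf_counter())
        # Attach to the currently open span, or start a new tree.
        (self._stack[-1].children if self._stack else self.roots).append(s)
        self._stack.append(s)
        try:
            yield s
        except Exception as exc:
            s.error = repr(exc)  # record the failure on this span, then re-raise
            raise
        finally:
            s.end = time.perf_counter()
            self._stack.pop()


def render(span: Span, depth: int = 0) -> list[str]:
    """Flatten a span tree into indented lines, roughly like a trace view."""
    status = f" ERROR {span.error}" if span.error else ""
    lines = [f"{'  ' * depth}{span.kind}:{span.name}{status}"]
    for child in span.children:
        lines.extend(render(child, depth + 1))
    return lines


if __name__ == "__main__":
    tracer = Tracer()
    with tracer.span("answer-question", "workflow"):
        with tracer.span("plan", "llm"):
            pass
        try:
            with tracer.span("search_docs", "tool"):
                raise TimeoutError("search timed out")
        except TimeoutError:
            pass  # the workflow recovers, but the tool span keeps the error
    print("\n".join(render(tracer.roots[0])))
    # workflow:answer-question
    #   llm:plan
    #   tool:search_docs ERROR TimeoutError('search timed out')
```

The tool failure in the example is recovered from, so the run "succeeds", yet the span tree still shows it. Spotting that kind of nuanced issue in flat logs is much harder.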

It's also worth noting that the Datadog integration made it easy to introduce dashboarding for our internal efforts, which has been a very helpful, previously missing ingredient in our work.

How is it working? / What's next?

I'll be honest: the Datadog LLM observability toolkit is just great.

The only problem I have at this point is that we mostly restrict Datadog accounts to folks within the technology organization, so workflow debugging isn't very accessible to folks outside that organization. However, in practice very few people who would be actively debugging these workflows don't already have access, so it's more of a philosophical issue than a practical one.