Whenever a new pull request is submitted to our agent’s GitHub repository,

we run a bunch of CI/CD operations on it.

We run an opinionated linter, we run typechecking, and we run a bunch of unit tests.

All of these work well, but none of them test entire workflows end-to-end.

For that end-to-end testing, we introduced an eval pipeline.

This is part of the Building an internal agent series.

Why evals matter

The harnesses that run agents have a lot of interesting nuance, but they’re

generally pretty simple: some virtual file management, some tool invocation,

and some context window management.

However, it’s very easy to create prompts that don’t work well, despite the

correctness of all the underlying pieces.

Evals are one tool to solve that, exercising your prompts and tools together

and grading the results.

How we implemented it

I had the good fortune to lead Imprint’s implementation of

Sierra for chat and voice support, and

I want to acknowledge that their approach has deeply informed

my view of what does and doesn’t work well here.

The key components of Sierra’s approach are:

- Sierra implements agents as a mix of React-inspired code that provide tools and progressively-disclosed context,

and a harness runner that runs that software. - Sierra allows your code to assign tags to conversations such as

“otp-code-sent” or “lang-spanish” which can be used for filtering conversations,

as well as other usecases discussed shortly. - Every tool implemented for a Sierra agent has both a true and a mock implementation. For example, for a tool that

searches a knowledge base, the true version would call its API directly,

and the mock version would return a static (or locally generated) version

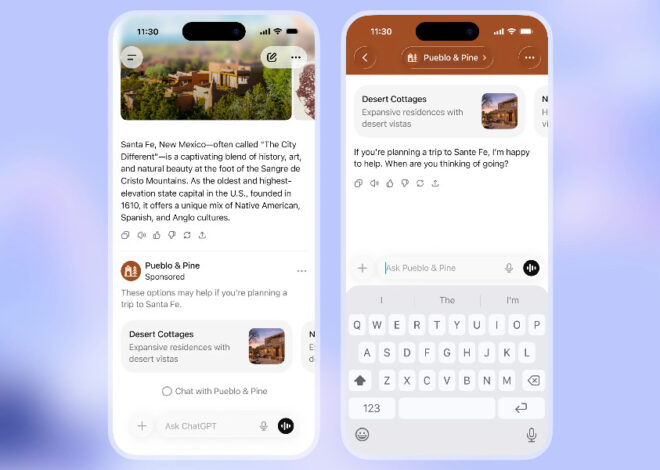

for use in testing. - Sierra names their eval implementation as “simulations.” You can create any number of simulations

either in code or via the UI-driven functionality. - Every evaluation has an initial prompt, metadata about the situation that’s available to

the software harness running the agent, and criteria to evaluate whether an evaluation succeeds. - These evaluation criteria are both subjective and objective.

The subjective criteria are “agent as judge” to assess whether certain conditions were met (e.g. was the response friendly?).

The objective criteria are whether specific tags (“login-successful”) were, or were not (“login-failed”) added to a conversation.

Then when it comes to our approach, we basically just reimplemented that approach

as it’s worked well for us. For example, the following image is the configuration for an eval we run.

Then whenever a new PR is opened, these run automatically along with our other automation.
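To make the pieces above concrete, here is a minimal sketch of how a tool with true and mock implementations and an eval definition could be modeled. It is not Sierra's API or our actual configuration: the names `Tool`, `EvalCase`, `search_knowledge_base`, and `escalate_to_human` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Tool:
    """A tool with a true implementation and a mock one (hypothetical shape)."""
    name: str
    real: Callable[[str], str]
    mock: Callable[[str], str]

    def run(self, arg: str, *, use_mock: bool) -> str:
        impl = self.mock if use_mock else self.real
        return impl(arg)


def search_kb_real(query: str) -> str:
    # The true version would call the knowledge base API; omitted in this sketch.
    raise NotImplementedError("real knowledge base call is out of scope here")


def search_kb_mock(query: str) -> str:
    # Static result so the eval does not depend on live data.
    return f"[mock] Top article for '{query}': How to reset your password"


@dataclass
class EvalCase:
    """One eval: initial prompt, situation metadata, and pass criteria."""
    name: str
    initial_prompt: str
    metadata: dict[str, str] = field(default_factory=dict)
    # Subjective criteria, to be graded by a judge model.
    judge_criteria: list[str] = field(default_factory=list)
    # Objective criteria: tools that must, or must not, be called.
    expected_tools: list[str] = field(default_factory=list)
    forbidden_tools: list[str] = field(default_factory=list)


search_kb = Tool("search_knowledge_base", search_kb_real, search_kb_mock)

case = EvalCase(
    name="password-reset",
    initial_prompt="I can't log in, how do I reset my password?",
    metadata={"channel": "chat"},
    judge_criteria=["The response is friendly."],
    expected_tools=["search_knowledge_base"],
    forbidden_tools=["escalate_to_human"],
)

# During an eval run the harness would route every tool call to the mock.
print(search_kb.run("reset password", use_mock=True))
```

Keeping the mock next to the true implementation means an eval can exercise the prompt and tool wiring without touching production systems.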

While we largely followed the map laid out by Sierra’s implementation,

we did diverge on the tags concept.

For objective evaluation, we rely exclusively on tools that are, or are not, called.

Sierra’s tag implementation is more flexible, but since our workflows are predominantly

prompt-driven rather than code-driven, it’s not an easy one for us to adopt.
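An objective check based on which tools were, or were not, called can be sketched roughly as follows. This is an illustrative assumption about the shape of such a check, not our actual grader; `check_tool_calls`, `ToolCheckResult`, and the tool names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ToolCheckResult:
    passed: bool
    missing: list[str]     # expected tools that were never called
    unexpected: list[str]  # forbidden tools that were called anyway


def check_tool_calls(
    called: list[str],
    expected: list[str],
    forbidden: list[str],
) -> ToolCheckResult:
    """Pass only if every expected tool was called and no forbidden tool was."""
    called_set = set(called)
    missing = [t for t in expected if t not in called_set]
    unexpected = [t for t in forbidden if t in called_set]
    return ToolCheckResult(not missing and not unexpected, missing, unexpected)


# Tool names recorded by the harness during one (hypothetical) eval run.
result = check_tool_calls(
    called=["search_knowledge_base", "send_reply"],
    expected=["search_knowledge_base"],
    forbidden=["escalate_to_human"],
)
print(result.passed)
```

Reporting the missing and unexpected tools separately makes a failed eval easier to debug than a bare pass or fail.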

Altogether, following this standard implementation worked well for us.

How is it working?

Ok, this is working well, but not nearly as well as I hoped it would.

The core challenge is the non-determinism introduced by these eval tests,

where in practice there’s very strong signal when they all fail, and strong

signal when they all pass, but most runs are in between those two.

A big part of that is sloppy eval prompts and sloppy mock tool results,

and I’m pretty confident I could get them passing more reliably

with some effort (e.g. I did get our Sierra tests almost always passing

by tuning them closely, although even they aren’t perfectly reliable).

The biggest issue is that our reliance on prompt-driven workflows rather

than code-driven workflows introduces a lot of non-determinism,

which I don’t have a way to solve without the aforementioned prompt

and mock tuning.
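A bit of arithmetic shows why most runs land between "all pass" and "all fail". The sketch below assumes each eval passes independently with the same probability, which is a simplification, and the pass rates and suite size are made-up numbers rather than measurements from our pipeline.

```python
def suite_pass_probability(per_eval_pass_rate: float, num_evals: int) -> float:
    """Probability that every eval passes in a single run, assuming independence."""
    return per_eval_pass_rate ** num_evals


# Illustrative numbers only: a suite of 10 evals at different per-eval pass rates.
for rate in (0.99, 0.95, 0.90):
    prob = suite_pass_probability(rate, 10)
    print(f"{rate:.2f} per eval, 10 evals -> {prob:.2f} chance of a fully green run")
```

Under these assumptions, even evals that each pass 90% of the time produce a fully green run only about 35% of the time, so a mixed result says little on its own.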

What’s next?

There are three obvious follow-ups:

- More tuning on prompts and mocked tool calls to make the evals more probabilistically reliable

- I’m embarrassed to say it out loud, but I suspect we need to retry failed evals to see if they pass e.g. “at least once in three tries” to make this something we can introduce as a blocking mechanism in our CI/CD

- This highlights the general limitation of LLM-driven workflows, and I suspect that I’ll have to move more complex workflows away from LLM-driven workflows to get them to work more consistently
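The "at least once in three tries" idea from the list above could look something like this. It is a sketch of the retry policy only: `passes_with_retries` and the `run_eval` callable are hypothetical names, and the flaky eval is simulated with a fixed sequence of outcomes so the example is deterministic.

```python
from typing import Callable


def passes_with_retries(run_eval: Callable[[], bool], max_attempts: int = 3) -> bool:
    """Return True as soon as one attempt passes; False if every attempt fails."""
    for _ in range(max_attempts):
        if run_eval():
            return True
    return False


# Simulated flaky eval: fails twice, then passes on the third attempt.
outcomes = iter([False, False, True])
print(passes_with_retries(lambda: next(outcomes)))
```

The trade-off is that if attempts are independent and each passes with probability p, the retried check passes with probability 1 - (1 - p)^3, so a workflow that is genuinely broken half the time would still pass most runs.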

Altogether, I’m very glad that we introduced evals. They are an essential mechanism for us

to evaluate our workflows, but we’ve found them difficult to consistently operationalize

as something we can rely on as a blocking tool rather than directionally relevant context.