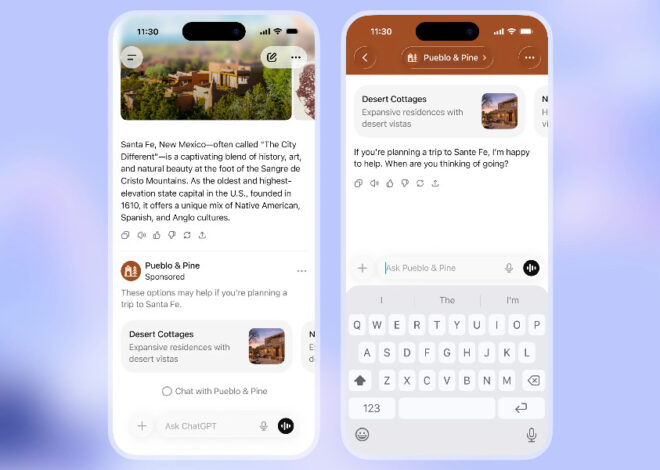

One of the most useful initial extensions I made to our workflows was injecting

associated images into the context window automatically, to improve the quality

of responses to tickets and messages that relied heavily on screenshots.

This was quick and made the workflows significantly more powerful.

More recently, there are a number of workflows attempting to operate on large

complex files like PDFs or DOCXs, and the naive approach of shoving them into

the context window hasn’t worked particularly well.

This post explains how we’ve adapted the principle of progressive disclosure

to allow our internal agents to work with large files.

This is part of the Building an internal agent series.

Large files and progressive disclosure

Progressive disclosure

is the practice of limiting what is added to the context window to the minimum necessary amount,

and adding more detail over time as necessary.

A good example of progressive disclosure is how agent skills are implemented:

- Initially, you only add the description of each available skill into the context window

- You then load the `SKILL.md` on demand

- The `SKILL.md` can specify other files to be further loaded as helpful

In our internal use-case, we have skills for JIRA formatting, Slack formatting, and Notion formatting.

Some workflows require all three, but the vast majority of workflows require at most one of these skills,

and it’s straightforward for the agent to determine which are relevant to a given task.
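To make the three stages concrete, here is a minimal sketch of skill loading as progressive disclosure. The `Skill` and `SkillRegistry` names, and the idea of holding skill contents in memory, are assumptions for illustration rather than a description of any particular skills implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Skill:
    name: str
    description: str  # stage 1: always present in the prompt
    body: str  # stage 2: contents of SKILL.md, loaded on demand
    extra_files: dict[str, str] = field(default_factory=dict)  # stage 3


class SkillRegistry:
    """Hypothetical registry that exposes skills one stage at a time."""

    def __init__(self, skills: list[Skill]):
        self._skills = {skill.name: skill for skill in skills}

    def prompt_summary(self) -> str:
        # Only names and descriptions enter the context window up front.
        lines = ["Skills:"]
        for skill in self._skills.values():
            lines.append(f"- {skill.name}: {skill.description}")
        return "\n".join(lines)

    def load_skill(self, name: str) -> str:
        # Called by the agent once it decides a skill is relevant.
        return self._skills[name].body

    def load_skill_file(self, name: str, path: str) -> str:
        # Called only if the SKILL.md points at a further file.
        return self._skills[name].extra_files[path]
```

The cost of an unused skill is then a single line of description, no matter how long its `SKILL.md` is.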

File management is a particularly interesting progressive disclosure problem, because files are so

helpful in many scenarios, but are also so very large. For example,

requests for help in Slack are often along the lines of “I need help with this login issue” with a screenshot attached,

which is impossible to solve without including that image into the context window.

In other workflows, you might want to analyze a daily data export in a very large PDF which is 5-10MB as

a PDF, but only 10-20kb of tables and text when extracted from the PDF.

This gets even messier when the goal is to compare across multiple PDFs, each of which is quite large.

Our approach

Our high-level approach to the large-file problem is as follows:

- Always include metadata about available files in the prompt, similar to the list of available skills.
  This will look something like:

      Files:
        - id: f_a1
          name: my_image.png
          size: 500,000
          preloaded: false
        - id: f_b3
          name: ...

  The key thing is that each `id` is a reference that the agent is able to pass
  to tools. This allows it to operate on files without loading their contents into
  the context window.

- Automatically preload the first N kb of files into the context window,
  as long as they are appropriate mimetypes for loading (png, pdf, etc).
  This is per-workflow configurable, and could be set as low as `0`
  if a given workflow didn’t want to preload any files.

  I’m still of mixed minds whether preloading is worth doing,
  as it takes some control away from the agent.

- Provide three tools for operating on files:

  - `load_file(id)` loads an entire file into the context window
  - `peek_file(id, start, stop)` loads a section of a file into the context window
  - `extract_file(id)` transforms PDFs, PPTs, DOCX and so on into simplified textual versions

- Provide a `large_files` skill which explains how and when to use the above tools
  to work with large files. Generally, it encourages using `extract_file` on any PDF, DOCX or PPT
  file that it wants to work with, and otherwise loading or peeking depending on the available space
  in the context window.
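The first three pieces of the approach above can be sketched roughly as follows. This is a sketch under stated assumptions, not a definitive implementation: `FileStore`, `StoredFile`, and `PRELOADABLE_MIMETYPES` are hypothetical names, files are held in memory, `start` and `stop` are treated as byte offsets, and "the first N kb of files" is interpreted as a shared budget that whole files are preloaded against in order. `extract_file` is left out because it depends on format-specific parsing.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumption: which mimetypes are safe to preload is a per-deployment choice.
PRELOADABLE_MIMETYPES = {"image/png", "application/pdf", "text/plain"}


@dataclass
class StoredFile:
    id: str
    name: str
    mimetype: str
    content: bytes


class FileStore:
    """Hypothetical in-memory store backing the file tools."""

    def __init__(self, files: list[StoredFile], preload_limit_bytes: int = 0):
        self._files = {f.id: f for f in files}
        # Per-workflow setting; 0 disables preloading entirely.
        self.preload_limit_bytes = preload_limit_bytes

    def preloaded_ids(self) -> set[str]:
        # Preload whole files, in order, while they fit in the budget.
        remaining = self.preload_limit_bytes
        ids = set()
        for f in self._files.values():
            if f.mimetype in PRELOADABLE_MIMETYPES and 0 < len(f.content) <= remaining:
                ids.add(f.id)
                remaining -= len(f.content)
        return ids

    def metadata_prompt(self) -> str:
        # Always included in the prompt, whether or not a file is preloaded.
        preloaded = self.preloaded_ids()
        lines = ["Files:"]
        for f in self._files.values():
            lines += [
                f"  - id: {f.id}",
                f"    name: {f.name}",
                f"    size: {len(f.content):,}",
                f"    preloaded: {str(f.id in preloaded).lower()}",
            ]
        return "\n".join(lines)

    def load_file(self, file_id: str) -> bytes:
        # Tool: return the entire file for inclusion in the context window.
        return self._files[file_id].content

    def peek_file(self, file_id: str, start: int, stop: int) -> bytes:
        # Tool: return only the requested slice of the file.
        return self._files[file_id].content[start:stop]
```

Because the tools take an `id` rather than file contents, the agent can decide what to load after seeing only the metadata listing.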

This approach was quick to implement, and provides significantly more control

to the agent to navigate a wide variety of scenarios involving large files.

It’s also a good example of how the “glue layer” between LLMs and tools is actually

a complex, sophisticated application layer rather than merely glue.

How is this working?

This has worked well. In particular, one of our internal workflows is oriented around

giving feedback about documents attached to a ticket, in comparison to other

similar, existing documents. The workflow simply did not work at all

prior to this approach, and now works fairly well without workflow-specific

support for handling these sorts of large files,

because the large_files skill handles that in a reusable fashion without

workflow authors being aware of it.

What next?

Generally, this feels like a stand-alone set of functionality that doesn’t

require significant future investment, but there are three places where

we will need to continue building:

- Until we add sub-agent support, our capabilities are constrained.
  In many cases, the ideal scenario of dealing with a large file is opening it in a sub-agent
  with a large context window, asking that sub-agent to summarize its contents,
  and then taking that summary into the primary agent’s context window.

- It seems likely that `extract_file` should be modified to return a referenceable, virtual `file_id`
  that is used with `peek_file` and `load_file` rather than returning contents directly.
  This would make for a more robust tool even when extracting from very large files.
  In practice, though, extracted content has so far always been quite compact.

- Finally, operating within an AWS Lambda requires pure Python packages, and
  pure Python is not very fast at parsing complex XML-derived document formats like DOCX.
  Ultimately, we could solve this by adding a layer to our lambda with the `lxml`
  dependencies in it, and at some point we might.
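As a rough illustration of the second and third points, the sketch below has `extract_file` return a virtual file id instead of the extracted text, using only the Python standard library to pull paragraph text out of a DOCX. The `VirtualFiles` class, the `_extracted` id suffix, and the choice to handle only DOCX are assumptions for illustration. It ignores headers, footnotes, and other document parts, and makes no claim about parsing speed.

```python
from __future__ import annotations

import io
import xml.etree.ElementTree as ET
import zipfile

# WordprocessingML namespace used by word/document.xml inside a .docx archive.
W_NS = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"


def extract_docx_text(data: bytes) -> str:
    """Return the document's paragraph text, one paragraph per line."""
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        root = ET.fromstring(archive.read("word/document.xml"))
    paragraphs = []
    for para in root.iter(f"{W_NS}p"):
        # A paragraph's text is split across runs; join the text nodes.
        text = "".join(node.text or "" for node in para.iter(f"{W_NS}t"))
        if text:
            paragraphs.append(text)
    return "\n".join(paragraphs)


class VirtualFiles:
    """Hypothetical store where extraction yields a new, referenceable id."""

    def __init__(self, originals: dict[str, bytes]):
        self._originals = originals
        self._virtual: dict[str, str] = {}

    def extract_file(self, file_id: str) -> str:
        # Return an id, not the text, so large extractions never enter
        # the context window unless the agent asks for them.
        virtual_id = f"{file_id}_extracted"
        if virtual_id not in self._virtual:
            self._virtual[virtual_id] = extract_docx_text(self._originals[file_id])
        return virtual_id

    def peek_file(self, virtual_id: str, start: int, stop: int) -> str:
        return self._virtual[virtual_id][start:stop]

    def load_file(self, virtual_id: str) -> str:
        return self._virtual[virtual_id]
```

With this shape, the agent can call `extract_file` on a large document and then `peek_file` the first few hundred characters before deciding whether to load the rest.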

Altogether, a very helpful extension for our internal workflows.