Introducing Mistral 3. Four new models from Mistral today: three in their “Ministral” smaller model series (14B, 8B, and 3B) and a new Mistral Large 3 MoE model with 675B parameters, 41B active.

All of the models are vision capable, and they are all released under an Apache 2 license.

I’m particularly excited about the 3B model, which appears to be a competent vision-capable model in a tiny ~3GB file.

Xenova from Hugging Face got it working in a browser:

@MistralAI releases Mistral 3, a family of multimodal models, including three state-of-the-art dense models (3B, 8B, and 14B) and Mistral Large 3 (675B, 41B active). All Apache 2.0! 🤗

Surprisingly, the 3B is small enough to run 100% locally in your browser on WebGPU! 🤯

You can try that demo in your browser. It fetches around 3GB of model weights, then streams from your webcam and lets you run text prompts against what the model is seeing, entirely locally.

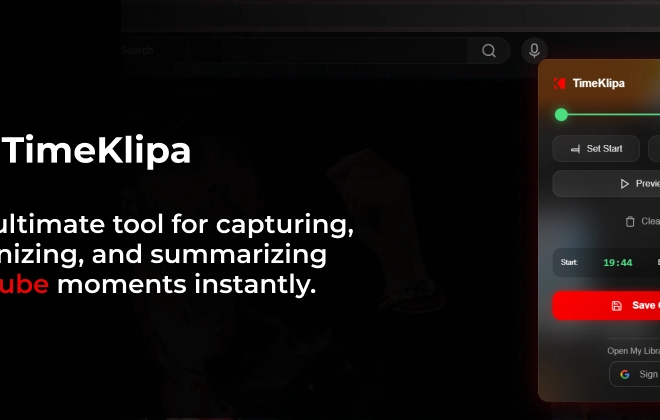

Mistral’s API-hosted versions of the new models are already supported by my llm-mistral plugin, thanks to the llm mistral refresh command:

```
$ llm mistral refresh
Added models: ministral-3b-2512, ministral-14b-latest, mistral-large-2512, ministral-14b-2512, ministral-8b-2512
```
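Once the refresh has picked up the new model IDs, they should also be usable from LLM's Python API. Here's a minimal sketch, under a few assumptions: that llm and llm-mistral are installed, that a Mistral API key has been configured (for example with llm keys set mistral), and that the plugin registers its models under a mistral/ prefix. The helper names mistral_model_id and run_prompt are hypothetical, made up for this illustration.

```python
def mistral_model_id(name: str) -> str:
    """Return the model ID with a "mistral/" prefix.

    Assumption: llm-mistral registers models as "mistral/<name>".
    Names that already carry the prefix are returned unchanged.
    """
    prefix = "mistral/"
    return name if name.startswith(prefix) else prefix + name


def run_prompt(name: str, prompt: str) -> str:
    """Send a text prompt to a Mistral-hosted model and return the reply.

    Hypothetical helper: needs the llm package, the llm-mistral plugin
    and a configured API key, and makes a network call.
    """
    import llm  # imported lazily so the helper above works without it

    model = llm.get_model(mistral_model_id(name))
    response = model.prompt(prompt)
    return response.text()
```

Calling run_prompt("ministral-3b-2512", "Generate an SVG of a pelican riding a bicycle") would then send that prompt to the hosted 3B model, assuming the model ID from the refresh output above is available to your account.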

I ran my pelican test (an SVG of a pelican riding a bicycle) against all of the models. Here’s the best one, from Mistral Large 3:

And the worst from Ministral 3B: